Latest from GetReal

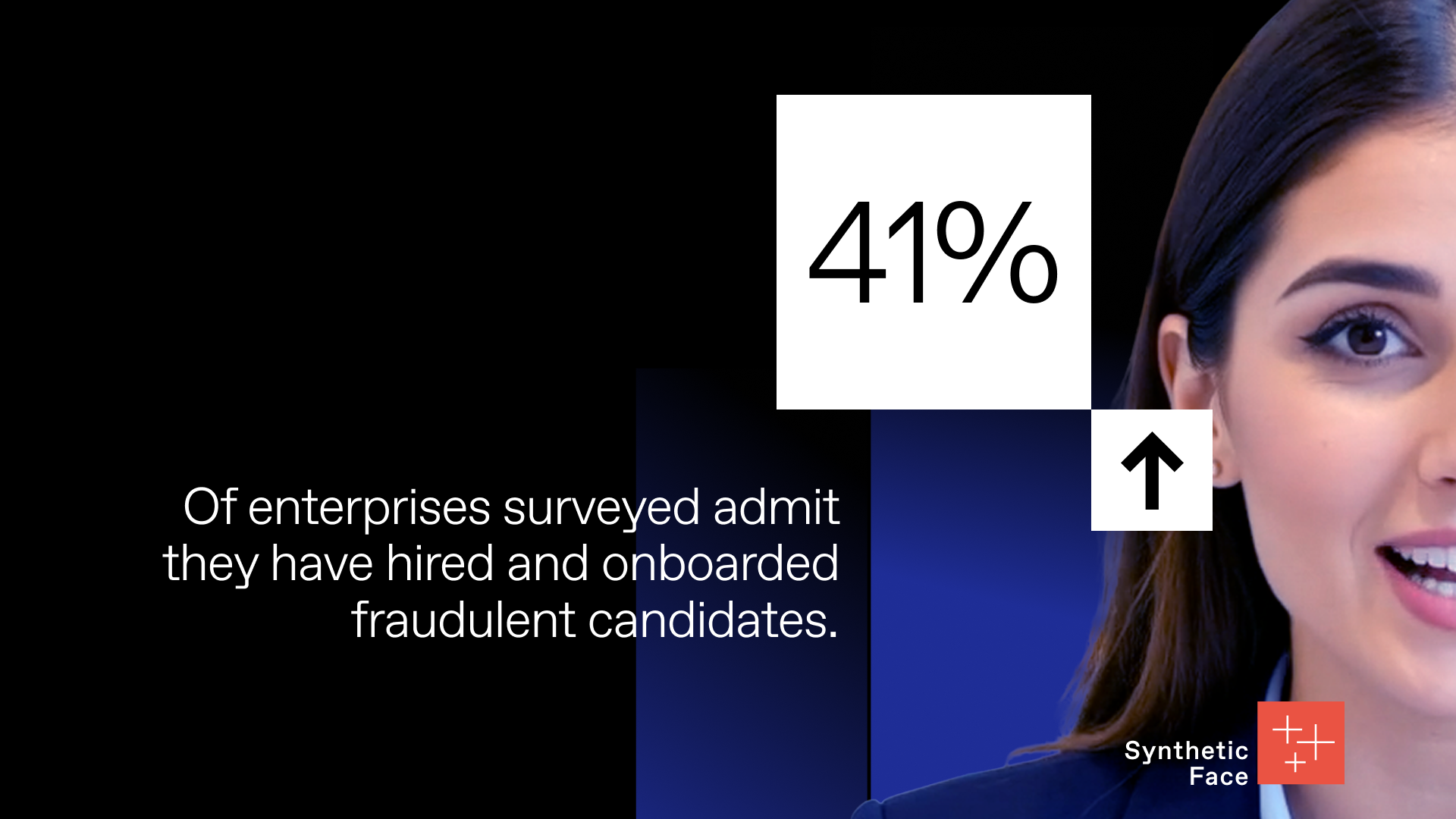

Deepfakes and synthetic identities are already slipping past interviews, background checks, and onboarding. Our Deepfake Readiness Benchmark Report reveals how exposed enterprises really are – and how prepared they are to detect and stop AI-powered identity attacks.

February 19, 2026

All

Blog

Threat Intelligence

February 10, 2026

All

Blog

Deepfakes

Threat Intelligence

Blog

February 2026

In the past, IT service help desks have counted on multi-factor authentication (MFA), biometrics, caller ID, training, scripts, escalation, and policies to prevent social engineering. But when employee and customer identity can be impersonated at scale, those approaches are no longer sufficient.

February 9, 2026

All

Whitepapers

Imposter Hiring

February 2, 2026

All

Blog

Deepfakes

January 23, 2026

All

Blog

Imposter Hiring

Blog

January 2026

North Korea’s remote IT worker operations are not slowing down—they’re succeeding. This post explains why traditional hiring and background checks fail against highly organized DPRK campaigns, and why organizations must rethink hiring through a zero-trust, human capital supply chain lens.

January 21, 2026

All

Video

Deepfakes

AI

Video

January 2026

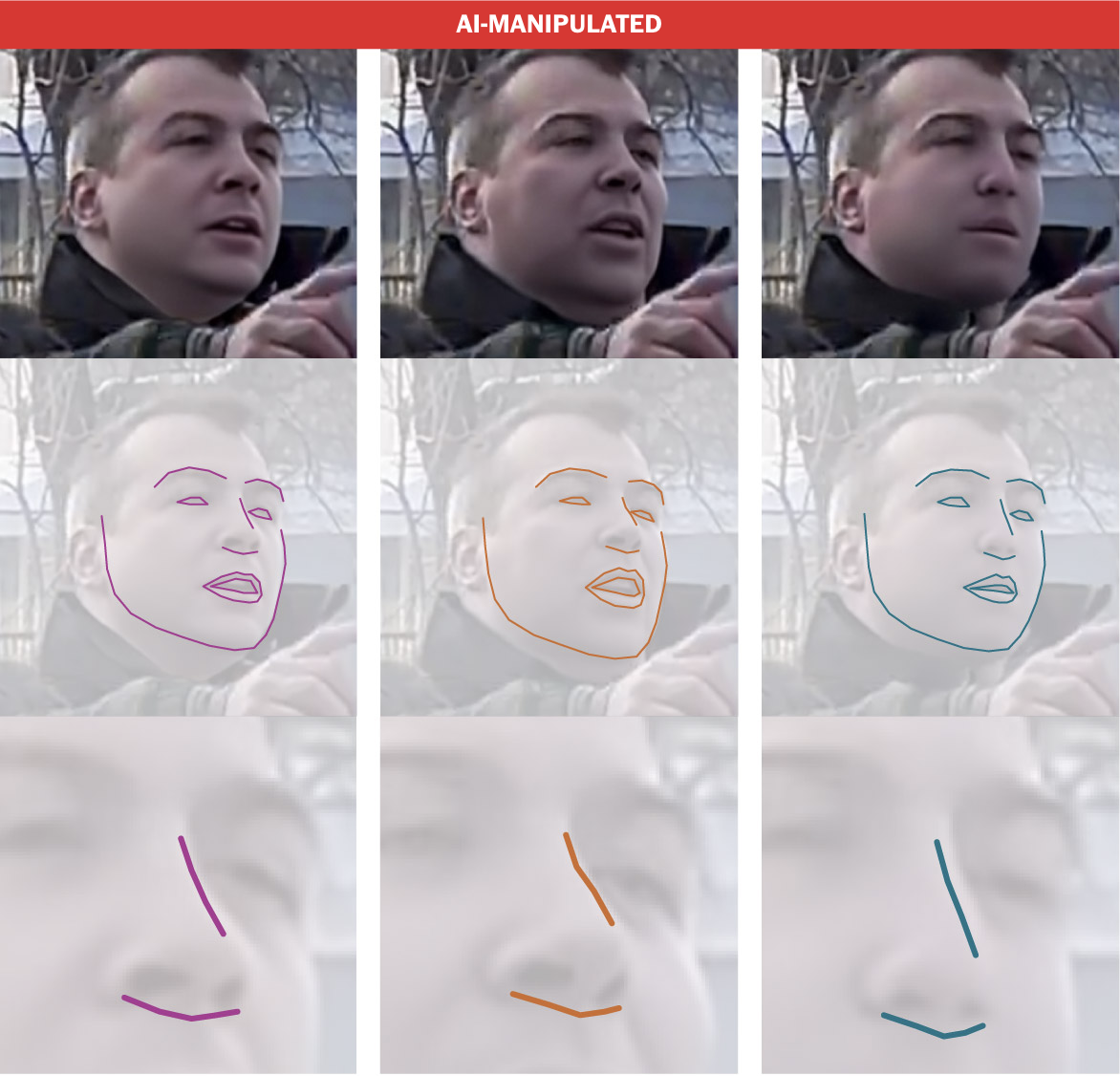

Kling’s motion control gives bad actors the ability to generate fully embodied impersonations from a single image. By removing the mismatch between face, body, and movement, this capability eliminates a key friction point that previously exposed many deepfake scams. The result is scalable impersonation and a fundamental shift in who and what we can trust online.

January 13, 2026

All

Report

Deepfakes

January 9, 2026

All

News

Deepfakes

January 9, 2026

All

News

AI

January 5, 2026

All

Video

Deepfakes

AI

December 21, 2025

All

News

AI

Deepfakes

December 21, 2025

All

News

Deepfakes

Threat Intelligence

News

December 2025

We're constantly seeing examples of real-world social engineering attacks using GenAI-powered voice impersonation. On Friday, the FBI issued a new warning that cybercriminals are successfully impersonating senior U.S. government officials with faked voice messages and texts, often exploiting familiarity and urgency to gain trust.

December 18, 2025

All

Video

Deepfakes

Video

December 2025

As recruiting moved online, a new class of adversary followed – using AI to fabricate identities, pass interviews, and infiltrate companies at scale. In some cases, these aren’t just scammers. They’re nation-state operatives. In this investigation, we look at how deepfake candidates are already moving through enterprise hiring pipelines – and why traditional checks no longer work.

00:07:57

December 15, 2025

All

News

Deepfakes

December 11, 2025

All

Report

December 11, 2025

All

Press Release

Deepfakes

November 21, 2025

All

Blog

Deepfakes

November 17, 2025

All

Video

Deepfakes

AI

November 3, 2025

All

News

Deepfakes

AI
