Today’s Fight Against Malicious Digital Media: Reflections on the Content Authenticity Summit 2025

In January 2020, I attended a small meeting at Adobe headquarters in San Jose, California. Adobe was kicking off the Content Authenticity Initiative (CAI) with the goal of developing an industry-wide standard for digital content attribution.

At the day-long event, co-sponsored by The New York Times Company and Twitter, we discussed all aspects of content authentication, from creation to weaponization and detection. Malicious deepfakes had not yet been fully realized, but throughout the day we heard a lot about rising trends and what effective protections against this looming threat might look like.

By the end of the day, a roadmap for the CAI had been laid out. It focused on authenticating content at the point of recording or creation through cryptographically signed metadata, robust watermarks, and robust digital signatures.

If you had asked me at the time, I would have told you: Well, that was fun and interesting, but this isn’t going anywhere. I’m delighted to say that I was wrong. Here we are, five years later, and I have just returned from giving the keynote at the fourth CAI summit, hosted at Cornell Tech in New York City.

Where We Stand Today

A lot has changed in the intervening five years. AI-generated images and voices are now nearly indistinguishable from reality, and video is not far behind. At the same time, the weaponization of deepfakes and AI-powered identity theft has increased, impacting individuals, organizations, and democracies. And social media made a hard turn away from responsible content moderation (including Twitter, which has since rebranded as X).

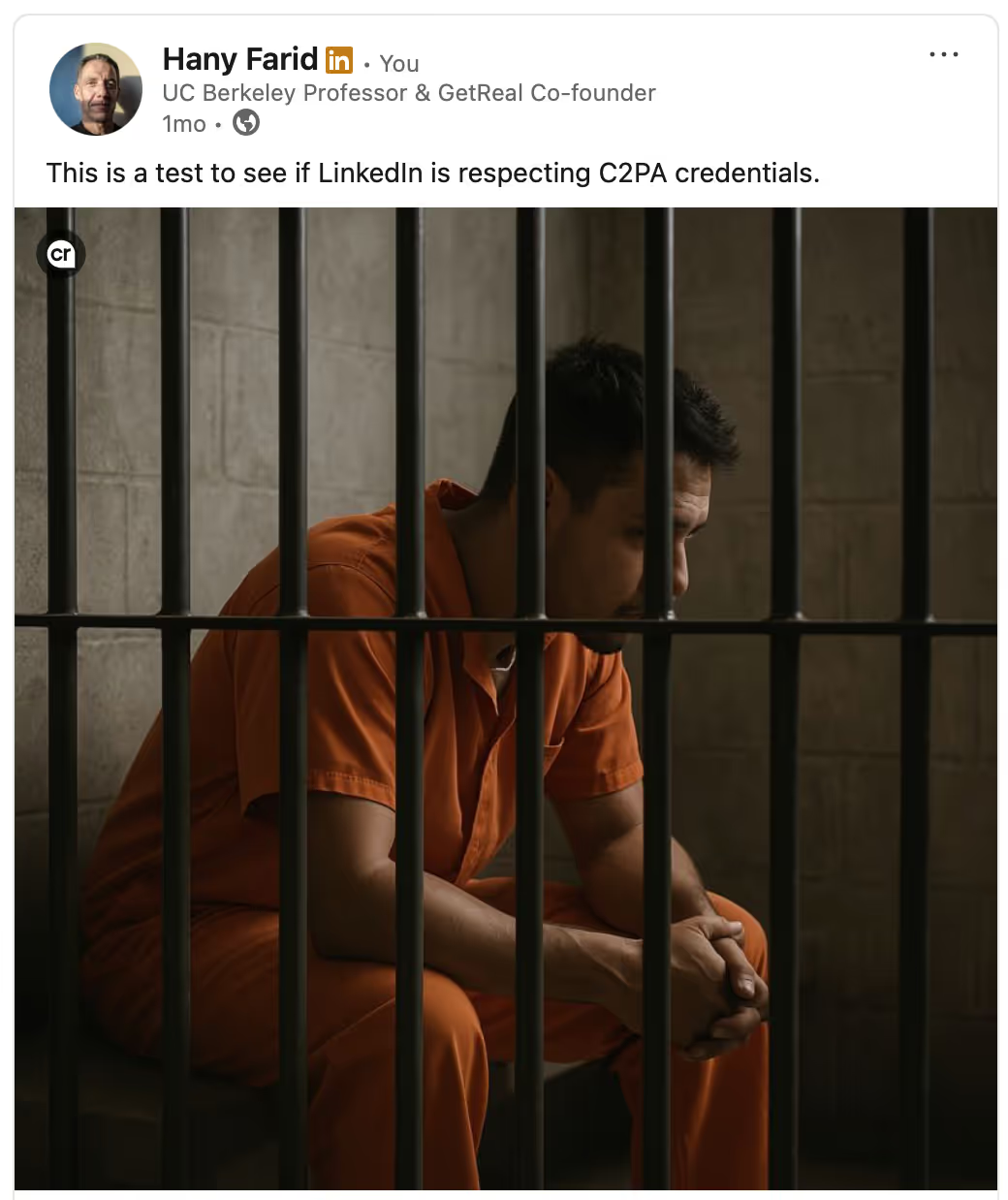

During these five years, the CAI also made tremendous strides in formalizing and deploying technology largely in line with the original vision. Today, for example, if you generate an image using ChatGPT, the image metadata will contain a cryptographically signed credential. If this image is then uploaded to LinkedIn, the credential will be read and verified, and a small “cr” symbol will appear in the upper-left corner. Clicking on this symbol will display the provenance information for the image.

Because metadata can, intentionally or unintentionally, be stripped from an image, storing a credential solely in the metadata is vulnerable to manipulation. To augment these credentials, imperceptible watermarks like TrustMark and SynthID have also begun to be deployed.
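To make the idea of a signed credential, and the weakness of storing it only in metadata, a little more concrete, here is a minimal sketch. It is not the actual Content Credentials format or any real library's API: real deployments use certificate-based signatures, whereas this toy uses an HMAC with a shared key purely as a stand-in so it runs on the Python standard library. The names `DEMO_KEY`, `sign_credential`, and `verify_credential` are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical shared secret. Real credentials rely on certificate-based
# signatures; HMAC is only a stand-in for this illustration.
DEMO_KEY = b"demo-signing-key"


def sign_credential(pixels: bytes, claims: dict, key: bytes = DEMO_KEY) -> dict:
    """Bind provenance claims to a hash of the content and sign the result."""
    payload = {
        "claims": claims,
        "content_sha256": hashlib.sha256(pixels).hexdigest(),
    }
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_credential(
    pixels: bytes, credential: Optional[dict], key: bytes = DEMO_KEY
) -> str:
    """Return a short status string describing the result of verification."""
    if credential is None:
        # Metadata was stripped: there is nothing left to verify.
        return "no credential"
    message = json.dumps(credential["payload"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, credential["signature"]):
        return "invalid signature"
    if credential["payload"]["content_sha256"] != hashlib.sha256(pixels).hexdigest():
        return "content modified"
    return "verified"


if __name__ == "__main__":
    pixels = b"pretend these bytes are image data"
    credential = sign_credential(pixels, {"generator": "example-ai-model"})

    print(verify_credential(pixels, credential))            # verified
    print(verify_credential(pixels + b"edit", credential))  # content modified
    print(verify_credential(pixels, None))                  # no credential
```

The last case is the important one: once the metadata is gone, a verifier cannot distinguish a stripped image from one that never carried a credential, which is the gap that watermarks embedded in the content itself are meant to help close.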

Industry-led standards like the CAI are fantastic, and I’ve been impressed with how much progress has been made in just five years. But, even though they are absolutely part of the solution, those leading the charge will tell you that content credentials don’t eliminate the threat posed by deepfakes.

That’s why the forensic techniques that I have been developing as an academic for the past 25 years, and those we are refining and expanding at GetReal, are so critical in today’s fight against malicious AI-generated media.

The Threat Landscape is Changing

We are quickly entering a world where we need to ask a whole host of new questions about who we interact with online: Are they AI-generated or not? If they are AI-generated, is the use of their likeness authorized by the person it depicts, and is their intent malicious?

If they are not AI-generated, we may still need to verify their identity, as we’ve discovered recently with various forms of impostor hiring. And, as AI is creeping into all aspects of the digital pipeline, even the question of whether something is natural or AI-generated will become more nuanced.

The overriding takeaway from the CAI Summit is that authenticity and trust are no longer the concern of a bespoke, quirky academic field. They now affect nearly everyone and every industry, and those on the front lines are starved for reliable solutions.
