
How Deepfake CCTV Videos Are Misleading Millions on Social Media

10 minute read

A new wave of AI-generated videos designed to mimic CCTV and home surveillance footage is spreading rapidly across social media, drawing millions of views.

From toddlers riding dogs through hallways to backyard wildlife caught on “camera,” these synthetic viral clips may seem harmless - even silly - but they’re intentionally crafted to mislead viewers into believing the footage is real.

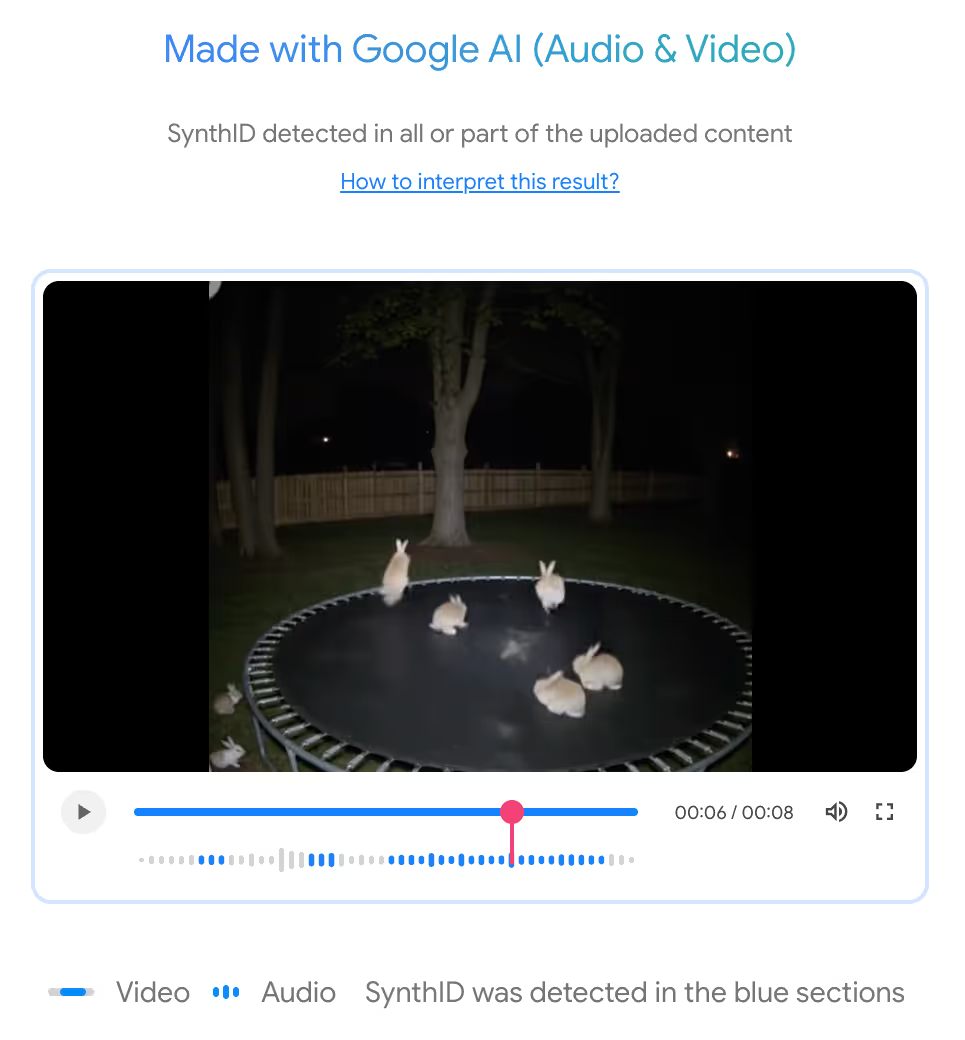

“I wanted it to feel real…like something you might actually catch on your home security camera. So I used a typical backyard setting. A fence, some trees, a trampoline,” admitted Andrew K., the creator of the “bunnies jumping on a trampoline” video.

Andrew, a marketing strategy intern, confirmed to GetReal that he made the video using a combination of Google Veo 3, Gemini, ChatGPT, and Canva. To push the illusion further, he published the video on TikTok under an alias, posing as a middle-aged woman named Rachel to make it more plausible.

In a LinkedIn post, he later revealed it was all part of an experiment to test what content would drive views and engagement. The video looked real enough to fool most viewers, until one bunny mysteriously vanished mid-jump.

“That single moment sent thousands to the comments to debate what they saw. Was it fake? Was it a glitch? Is this AI? That curiosity only boosted engagement, driving more views, more shares, and more discussion,” he added in his online post.

Why This Matters: Visual Trust Under Attack

For years, CCTV footage has served as trusted visual evidence in everything from investigating crimes to exposing abuse and holding institutions accountable. It’s considered one of the most believable formats in journalism and public discourse.

Now we’ve crossed a new line, one where false “proof” can be generated in minutes using easily accessible AI tools and passed off as real with no visual cues or disclaimers. There is also little to no friction between creation and mass distribution.

In the case of the bouncing bunnies, we were able to extract an invisible SynthID watermark, which confirmed that the video is AI-generated. But because not all generative-AI models watermark their content, this type of signal cannot always be relied upon.

An Emerging Threat

This is an emerging attack vector: one that targets visual trust and exploits the assumption that “camera footage doesn’t lie.”

Now, imagine what happens when this technology is used with intent to frame an executive, discredit a political figure, or manufacture “evidence” for use in a court case.

Without clear on-platform labeling and robust detection technology, the risk is enormous.

We are entering a time when even the most trusted video formats require detection technology and forensic-level analysis to verify. That level of authentication will soon be essential, especially as synthetic footage begins to surface in legal proceedings.