Hiring Kill Chain: Securing the Front Door in a GenAI World

Hiring Kill Chain. The term itself says it all about the hyperconnected, end-user-centric and increasingly AI-driven world we live in today. As we steadily march toward fulfilling the promises of Web 3.0, the areas of identity and privacy are moving in the opposite direction.

Yes, nation-state espionage and insider threats have been around since before the term “cybersecurity” was coined, but the thought of adversaries weaponizing Human Resources? That once seemed remote. But it shouldn’t be that big of a surprise.

While HR has historically been involved in physical security conversations (think badges), most of the real discussion of security risk and identity strategy has focused on customers, employees, and contractors, rather than job candidates. So, with the lion’s share of our attention and dollars focused on protecting IT Infrastructure (the proverbial back door), adversaries realized that most companies’ front doors were left ajar in this era of GenAI and deepfakes.

It is undeniable that AI-generated and physical imposter job candidates are rising dramatically, so much so that Gartner Research predicts that by 2028, over 25% of all candidates will be fake or imposters. This represents a significant expansion of risk for enterprises, our country, and our allies, as the global war for talent now encompasses a malicious front.

I recently wrote a piece for Fast Company, outlining these exact dangers—how interview processes and HR departments now serve as a low-friction entry point for foreign adversaries and nation-state actors, such as North Korea, which are already leveraging deepfake technology to penetrate the defenses of the Global 2000.

As deepfakes become increasingly easy to create and deploy, we must consider the broader implications of today’s threat landscape and how threat actors will exploit any business process that has been digitized over the past two decades of digital transformation.

To this end, it is critical that we apply “red team thinking” to every single business process that could be susceptible to deception associated with weaponized digital content. Whether it takes the form of a static profile picture or a real-time interaction with a bag-of-bits that looks like a trusted colleague or an attractive job candidate, every critical business process should apply the concept of the Kill Chain from front to back. We must be mindful of potential compromises that could arise from the consumption of malicious digital content.

The Hiring Kill Chain

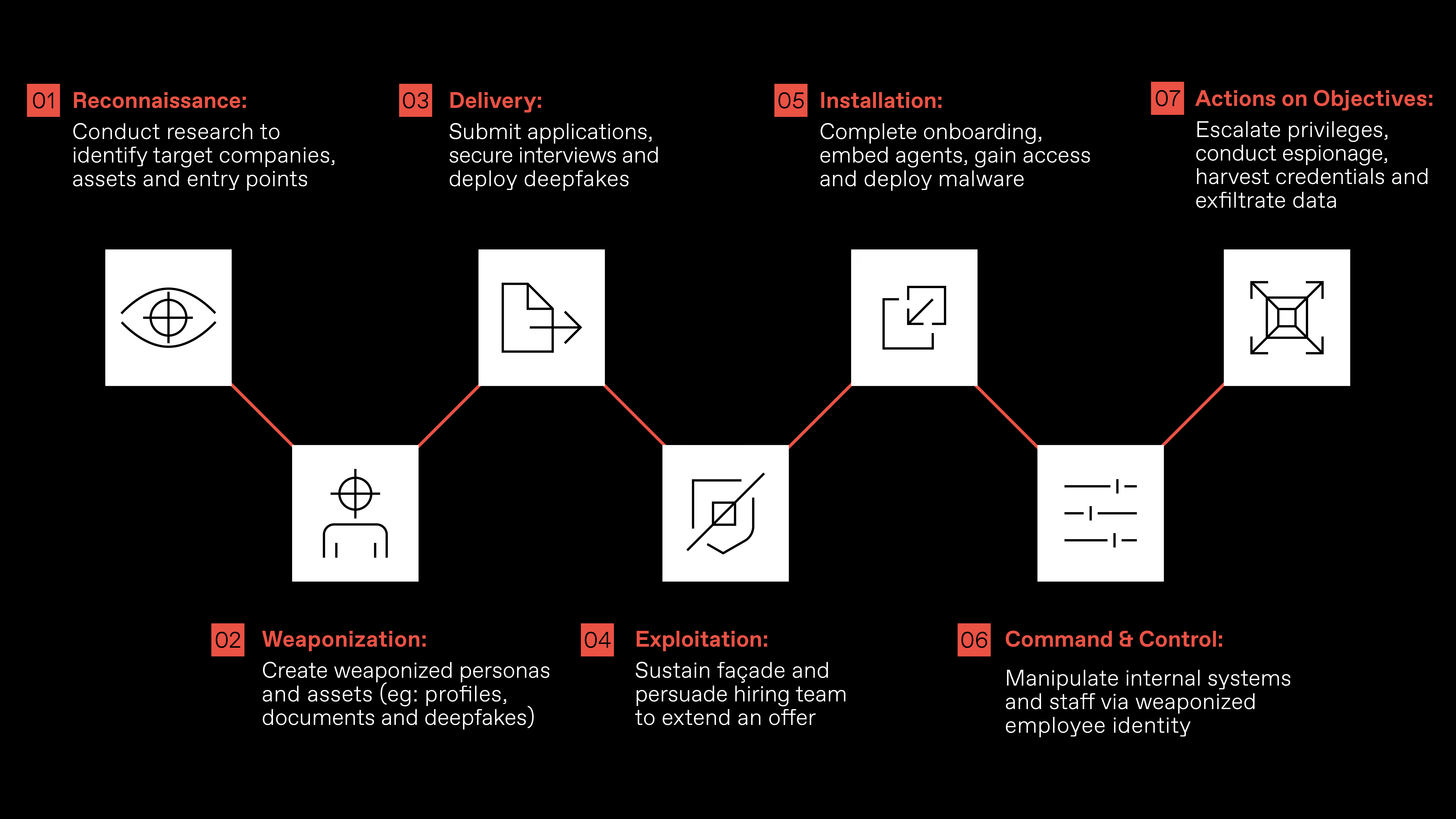

So, what is the Hiring Kill Chain? It is simply the application of the well-known Kill Chain concept coined by Lockheed Martin in 2011 to the HR recruitment and hiring process. It is a methodical, phase-based approach for business, cyber, and HR professionals to understand and visualize how adversaries attempt to attack Human Resources to penetrate and compromise your organization. Below is a brief description of each of the seven stages, along with some of the tactics adversaries are using at each step of the attack.

Phase 1: RECONNAISSANCE

In this initial phase, the adversary conducts research on an organization’s employees, assets, and intellectual property. They explore job postings on the company website or Indeed and attempt to determine what HR systems are used internally (e.g., Workday, Greenhouse). There is considerable effort to deeply understand the hiring process at each step along the way:

- How many interviews?

- Who is involved?

- What is the background of the hiring managers?

- Who are their family members?

This is the all-important intelligence-gathering phase, which makes the fake candidate appear well-versed and invested in the process. And they are, just for the wrong reasons.

Phase 2: WEAPONIZATION

In this phase, the adversary will create weaponized personas in the form of GenAI deepfakes and/or recruit “look-alike” accomplices to take interviews or be available to operate behind enemy lines once the candidate is hired. In many cases, the look-alikes have been members of the same family.

The adversary will also fabricate key assets such as fake or modified LinkedIn profiles, fake resumes, and any false identity documents the company may require as part of the initial verification or final onboarding process.

Phase 3: DELIVERY

During this phase, the adversary deploys the fabricated assets against the HR process, starting with the submission of a fake resume that includes links to fake LinkedIn accounts, freelance marketplaces (e.g., Upwork), or other social media assets. Once an interview is secured, the adversary will deploy deepfakes in several different scenarios.

Some candidates may be fake from the initial video interview if they are sophisticated in the use of deepfake tools. Others may insert a deepfake during particularly challenging parts of the interview, like technical evaluations, or simply drop a look-alike into an interview if the interviewers have changed. The adversary will leverage anything fake along the way to make it to the final selection process.

Phase 4: EXPLOITATION

Once inside the HR interview process, the adversary will take a broader set of actions to both sustain their facade and expand their reach and information gathering within the enterprise. Such actions include having fake and fabricated references proactively reach out to hiring managers and sending out thank-you notes to all interviewers from fake email accounts to develop a rapport with influencers in the process and future co-workers who will be targeted post-hire.

The goal here is to impress the hiring team beyond expectations with social engagement that is often lacking among today’s Gen Z candidates, all aimed at convincing the organization to extend an offer.

Phase 5: INSTALLATION

Once the hiring decision has been made, the organization typically moves to the onboarding process. This typically involves an identity verification process where the adversary presents fake or manipulated content digitally or physically. In many cases, the entire process is digital, including the provisioning of credentials to key corporate systems.

Once this is complete, the adversary has gained a foothold in the organization and begins to gain broader access through privilege escalation and incremental requests for access to company systems. In many cases, malware is deployed internally to broaden the adversary’s reach and access to information.

Phase 6: COMMAND & CONTROL

During this phase, the adversary begins to manipulate internal systems and colleagues with their weaponized identity. Depending on their sophistication, the impostor will be selective about whether to show up in Zoom calls with camera and voice on, as they attempt to hide in large, globally distributed matrixed organizations.

Phase 7: ACTIONS ON OBJECTIVES

Once firmly embedded and accepted within the organization, in many cases by being model colleagues and doing exceptional work, the adversary will begin to execute its agenda, typically consisting of financial fraud, data and IP exfiltration, and other actions harmful to the business. In many cases, the objectives of nation-states or criminal organizations could be as simple as collecting paychecks across one or multiple companies and wiring the funds to finance the adversary’s own mission.

The “Kill Chain” is certainly not a new concept, but it has not historically been associated with “non-traditional cyber targets” like Human Resources. I would encourage all organizations to apply Kill Chain thinking and planning to any mission-critical business process in their company and their extended partner ecosystem. While imposters and deepfakes are certainly concerning developments with the adoption of GenAI, these are not the only threats that have emerged. We must be prepared for what comes next.
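As a starting point for that kind of planning, the seven phases can be written down as a simple checklist that a red team fills in for any business process. The sketch below is illustrative only: the names `Phase`, `HIRING_TACTICS`, and `KillChainReview` are hypothetical, the tactics are paraphrased from the phase descriptions above, and the example control is an assumption rather than a recommendation from this article.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Phase(IntEnum):
    """The seven Kill Chain phases, in order."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


# Adversary tactics per phase, paraphrased from the descriptions above.
HIRING_TACTICS = {
    Phase.RECONNAISSANCE: ["study job postings and HR systems",
                           "map interview steps and hiring managers"],
    Phase.WEAPONIZATION: ["build deepfake personas or recruit look-alikes",
                          "fabricate profiles, resumes, identity documents"],
    Phase.DELIVERY: ["submit fake resume with links to fake profiles",
                     "use deepfake or look-alike during interviews"],
    Phase.EXPLOITATION: ["fake references contact hiring managers",
                         "thank-you notes from fake email accounts"],
    Phase.INSTALLATION: ["present fake documents at onboarding",
                         "obtain credentials, escalate privileges"],
    Phase.COMMAND_AND_CONTROL: ["manipulate systems and colleagues",
                                "avoid camera and voice on calls"],
    Phase.ACTIONS_ON_OBJECTIVES: ["financial fraud",
                                  "data and IP exfiltration",
                                  "collect and forward paychecks"],
}


@dataclass
class KillChainReview:
    """Tracks which phases of a business process have a defensive control."""
    process: str
    tactics: dict
    controls: dict = field(default_factory=dict)  # Phase -> list of controls

    def add_control(self, phase: Phase, control: str) -> None:
        self.controls.setdefault(phase, []).append(control)

    def uncovered_phases(self) -> list:
        """Phases with no recorded control, in Kill Chain order."""
        return [p for p in Phase if not self.controls.get(p)]


review = KillChainReview("hiring", HIRING_TACTICS)
# Hypothetical control, used only to show how the checklist is filled in.
review.add_control(Phase.INSTALLATION, "verify identity documents in person")
for phase in review.uncovered_phases():
    print(f"No control recorded for phase {phase.value}: {phase.name}")
```

The same structure can be reused for other processes by swapping in a different tactics dictionary. The value of the exercise lies in the uncovered phases it exposes, not in the code itself.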

Shadow IDs and the Rise of Deep Synthetics are Next

Given the current state of GenAI, we are witnessing the rapid creation and deployment of deepfakes across various media types, including images, audio, and video files, as well as real-time communication streams on collaboration platforms such as Zoom and Microsoft Teams. The technology has advanced so rapidly that by the end of the year, the odds that anyone, including the technically sophisticated, can tell legitimate from illegitimate content will likely be no better than chance.

Making this trend even more troubling are the many legitimate use cases associated with Avatars, including driving engagement with marketing audiences, speaking a foreign language with your team, or, in some cases, being in two places at once to help your organization scale. Not knowing what is real or not real has legitimate impacts on the trust model of organizations, depending on the audience.

Add to this the rise of Shadow IDs, as we call them at GetReal, in the form of apps like Pickle that allow employees to conceal their location or their dress while at work. While these are the legitimate images and likenesses of the employee, they do not capture the reality of the moment. What may be playful and downright innocuous could also be viewed as deceptive, depending on the circumstances.

Lastly, there are what we call at GetReal “DeepSynthetics,” which are synthetic identities with a deepfake image and likeness wrapped around them. Synthetic identities have been a problem for the financial industry for years. They have become even scarier with the advent of deepfake technology, which allows them to engage in a broader set of scenarios depending on the verification and authentication schemes in question. This problem will also extend to agentic AI, a subject area we are closely monitoring.
