Stop Fake Job Applications

Real-time detection & mitigation of fraudulent job candidates who use deepfakes and deception in remote interviews.

Still think it won’t happen to you?

Gartner

GetReal Security-sponsored survey research

The Costs of Imposter Hiring and Job Candidate Fraud

Fraudulent job candidates use AI-generated content and live deepfakes to gain employment under false pretenses and embed themselves as insider threats.

Cybersecurity risk

Imposters harvest credentials, steal intellectual property, exfiltrate data, and install backdoors

Financial risk

An imposter may engage in payments fraud or extort the company

Compliance risk

Fines, breach of contract, breach notifications

Brand damage / reputational risk

News of a fraudulent hire may breed doubt in customers, employees, and investors

Understand Best Practices for Remote Hiring to Stop Fraud

Stopping Fake Job Applications Early in the Hiring Process

Detecting a candidate’s use of synthetic audio and video in an interview, or matching them against do-not-hire or known-imposter lists, stops adversaries before they’re onboarded.

Mitigate risk

Catch and reject fraudulent job candidates before they infiltrate your corporate systems

Protect IP and finances

Prevent theft and extortion attempts resulting from imposter hiring

Reduce hiring costs and time-to-hire

Filter out deceptive applicants early to avoid wasted time interviewing imposters and re-hiring

Don’t hire another imposter. Save time and money by identifying and removing deceptive job candidates early in the hiring process.

Essential Capabilities to Stop Hiring Fraud

Stopping hiring fraud takes multiple teams and functions working together, which requires a solution that serves the needs of every stakeholder.

Interview fraud monitoring

Teams need automatic screening of videoconference interviewees to detect imposters in real time

Easy to use

Interviewers need a frictionless experience and cybersecurity analysts need specific, actionable intel to investigate

Fast detection

Every second spent with an adversary in an interview increases risk and wastes resources

Evidence-backed results

Users need to trust alerts, and legal teams must be assured only the necessary evidence is collected

Identify imposters, not only deepfakes

Not all imposters use deepfakes, so complete protection must account for other tactics

See How GetReal Protect Disrupts Imposters Before Onboarding

How to Tell if a Job Applicant is Fake (You Probably Can’t)

GenAI-powered deepfake tools outpace manual checks, so reliably spotting an imposter in a videoconference interview only gets more difficult.

- Many real-time deepfake tools can no longer be defeated by asking an interviewee to wave their hand in front of their face

- Syncing between the mouth of a synthesized face and the transmitted audio continues to improve

- Many imposters (including fake DPRK candidates) do not make use of real-time deepfakes

Because humans can’t reliably spot every imposter, technology is required to scale protection.

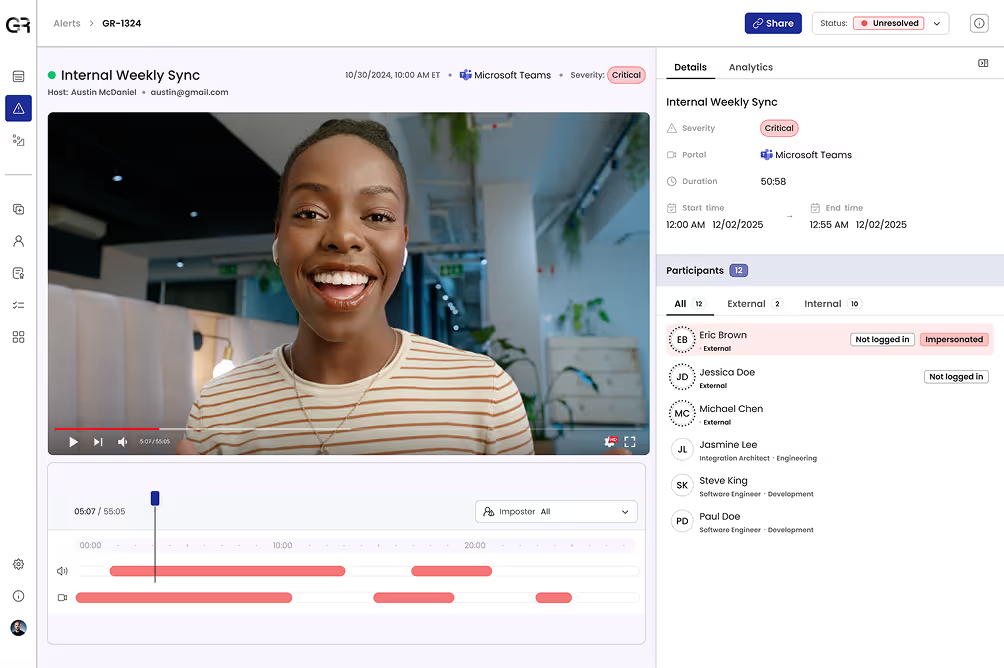

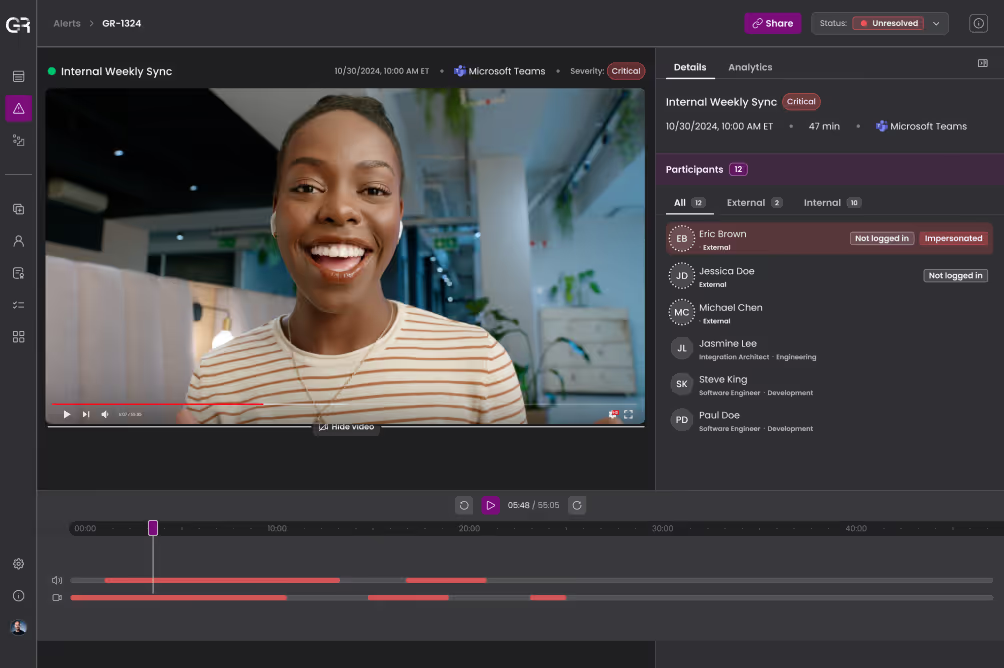

Interview Fraud Detection: Evidence, Not Probability

Applying AI to candidate fraud protection is necessary for scale, but it is not enough on its own. Pairing machine learning with digital forensics makes detections accurate and explainable. A bare probability score, by contrast, is something investigators may not trust, which delays response.

Reverse-engineering attacker tools

Analyze the toolchain used to create deepfakes to understand how the tools operate

Identifying forensic artifacts

Understand the subtle traces synthesis leaves behind while distinguishing them from processing done by videoconferencing platforms

Monitoring grounded in forensics

Train detection models on forensic signals to reduce false positives and increase accuracy

Identity-centric detection

Monitor not only for candidate use of deepfakes, but also known imposters or individuals on do-not-hire lists

Try GetReal Protect Free and Stop Deepfake Interviews

Trustworthy detection in seconds

with message prompts to hosts and response actions that don’t burden end-users

Forensics-grounded alerts

that stand up to scrutiny and provide “at-the-scene-of-the-crime” evidence of the impersonation and what was discussed

Frictionless end-user experience

with intuitive investigation workflows and policy-defined response

Enriched with threat intel

to identify known-bad actors (e.g., DPRK IT workers) and tools used in the wild by impersonators

Identity-focused visibility

to map a deceptive identity’s impact across meetings and users to accelerate risk containment

Job Candidate Fraud Resources

Frequently asked questions

Hiring fraud consists of fake job candidates using generative AI tools to create and submit fake resumes, professional profiles, and more to get hired under false pretenses. Imposters’ objectives include simply collecting a paycheck, gaining access to corporate systems, stealing intellectual property, staging malware, or, if they’re found out, extorting the employer or deploying ransomware. Job candidate deception increases risks including financial loss, insider threats, and regulatory and compliance violations.

Remote work exploded as a result of the COVID-19 pandemic, and more and more enterprises also came to realize that remote hiring can be faster, provide a more convenient candidate experience, and open up a larger talent pool.

Because of that accelerated transition, remote hiring processes didn’t receive proper cybersecurity assessments and hardening. In addition, identity verification and management solutions focus on employees or customers rather than job applicants, making screening processes easier to slip through.

Generative AI and deepfakes give fraudsters powerful tools to deceive recruiters, HR staff, hiring managers, and other staff.

- Deepfake job interviews - Fraudulent applicants will use GenAI to impersonate a real human in real time or use face and voice swaps to simply hide their real identity

- AI-generated professional profiles - Adversaries will use GenAI to build synthetic resumes and certifications, or develop or clone LinkedIn, GitHub, and other profiles

- Forged IDs and documentation - With AI tools, attackers can edit or create convincing forged government IDs and other documentation

- Fraud campaigns at scale - GenAI lets a single individual generate numerous, slightly different identities and use them to apply for many jobs, multiple times

The majority (76%) of hiring managers struggle with assessing candidate authenticity due to AI. HR teams can’t rely on their intuition or legacy screening methods to detect candidate fraud.

While adversarial nation-states have long planted operatives as spies in U.S. corporations, not all candidate fraud is tied to a nation-state such as North Korea. Job candidate fraud takes different forms and occurs at different points in the hiring lifecycle, such as:

- Impersonation - Hired under stolen, borrowed, or synthetic ID

- Proxy / stand-in interviewee - Someone else interviews for the candidate

- Fake references - Co-conspirators impersonate references

- Overemployment - The candidate conceals concurrent jobs (polyworking)

- Signing bonus theft - The candidate accepts the bonus and never shows up

Hiring an imposter or fake candidate isn’t only a matter of a bad employee fit. It increases your organization’s risk and costs.

- Cybersecurity: Imposters are an insider threat that can move laterally, harvest credentials, exfiltrate data, and install backdoors

- Financial: An imposter’s goal may be payment fraud, or they may extort the company if found out

- Operational: Wastes recruiting and onboarding resources, damages employer brand, and requires costly re-hiring

- Legal & Compliance: Fines, breach of contract, breach notifications

- Intellectual Property: Theft of trade secrets, proprietary code, and misuse or co-option of brand assets

- Reputational: News of a fraudulent hire may breed doubt in customers, employees, and investors

- Workplace Safety: Imposters may harass staff or delay emergency response if they don’t reside where they claim to live